Inference-as-a-service for offloading AI computing

Project: SuperSONIC

Modern scientific experiments generate vast amounts of data, placing growing demands on computing resources that traditional processors can no longer meet.

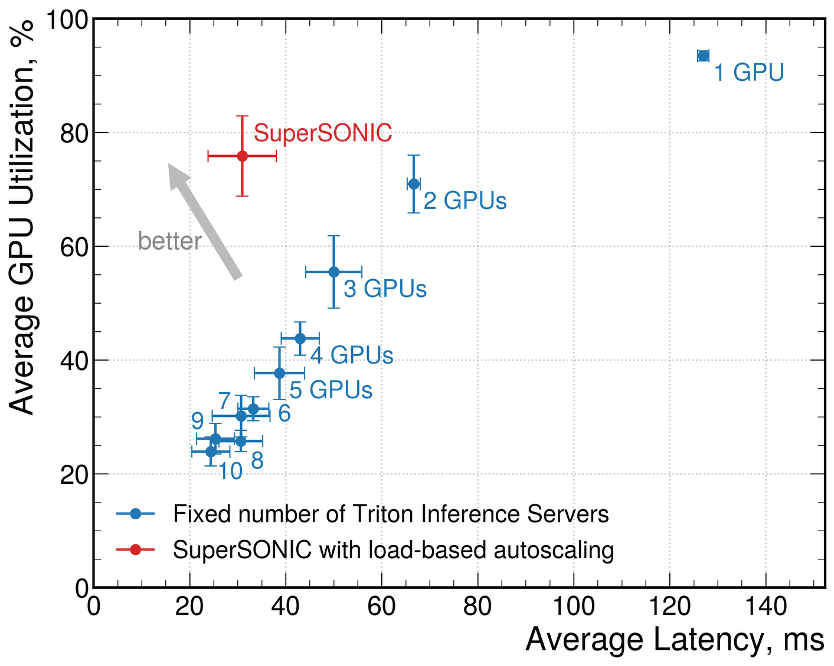

The SuperSONIC project addresses this challenge by creating a shared server infrastructure that seamlessly integrates advanced compute accelerators, such as graphics processing units (GPUs), neural processing units (NPUs), field-programmable gate arrays (FPGAs), and other cutting-edge hardware built for computationally intensive tasks, especially machine learning. Because it is designed for reuse across multiple scientific experiments, the system offers a scalable solution to resource-allocation challenges and helps optimize data-processing workflows.
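To make the inference-as-a-service idea concrete, here is a minimal Python sketch, assuming a toy in-process service. The names `Accelerator` and `InferenceService` and the "fewest requests served" dispatch rule are hypothetical illustrations, not SuperSONIC's actual API or scheduling policy, and the "models" are plain Python functions standing in for machine-learning models on real hardware. It shows the core design point: client code only names a model and sends inputs, while the shared service decides which accelerator in the pool handles each request.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical model type: takes a list of inputs, returns a list of outputs.
Model = Callable[[List[float]], List[float]]


@dataclass
class Accelerator:
    """Hypothetical stand-in for one GPU, NPU, or FPGA in the shared pool."""
    name: str
    served: int = 0  # number of requests this device has handled so far

    def run(self, model: Model, inputs: List[float]) -> List[float]:
        # A real server would execute the model on the device; here we just call it.
        self.served += 1
        return model(inputs)


@dataclass
class InferenceService:
    """Hypothetical shared server: clients name a model, the service picks a device."""
    devices: List[Accelerator]
    models: Dict[str, Model] = field(default_factory=dict)

    def register(self, name: str, model: Model) -> None:
        self.models[name] = model

    def infer(self, model_name: str, inputs: List[float]) -> Tuple[str, List[float]]:
        # Assumed dispatch rule: send the request to the device that has served
        # the fewest requests (ties go to the earliest device in the list).
        device = min(self.devices, key=lambda d: d.served)
        return device.name, device.run(self.models[model_name], inputs)


# Two "experiments" share one pool of accelerators through the same service.
service = InferenceService([Accelerator("gpu0"), Accelerator("fpga0")])
service.register("tagger", lambda xs: [2 * x for x in xs])   # toy model for experiment A
service.register("tracker", lambda xs: [x + 1 for x in xs])  # toy model for experiment B

results = [
    service.infer("tagger", [1.0, 2.0]),
    service.infer("tracker", [3.0]),
    service.infer("tagger", [0.5]),
]
print(results)  # [('gpu0', [2.0, 4.0]), ('fpga0', [4.0]), ('gpu0', [1.0])]
```

Because clients never reference a device directly, adding or swapping accelerators in the pool would not change their code. The sketch deliberately omits network transport, concurrency, and request batching.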